I wonder if a slow MRP process (especially when using more threads) isn’t caused by:

- Too Many Indexes on One Table

- Too Many Fields in One Index

- Too Few Indexes

-

Locking and Blocking (could be the reason why fewer threads are a lot faster)

Too Many Indexes on One Table: If you look at Epicor’s indexes there are, what seems like, “a lot” of Non-Clustered Indexes on one single table. The more non-clustered indexes you have on a table, the slower your inserts and deletes will go. If you have a table with 10 non-clustered indexes, that’s 10x (or more) writes an insert has to do for all of the data pages involved with each index. (Courtesy Brent Ozar)

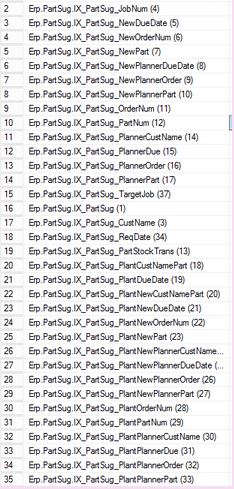

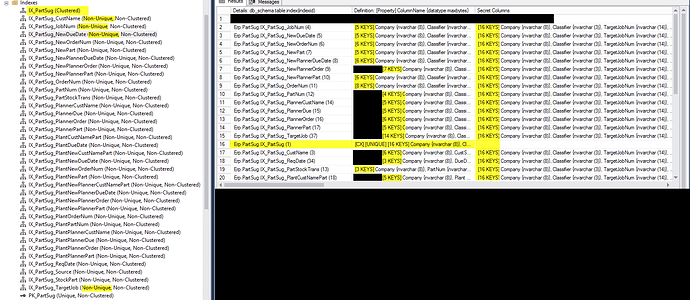

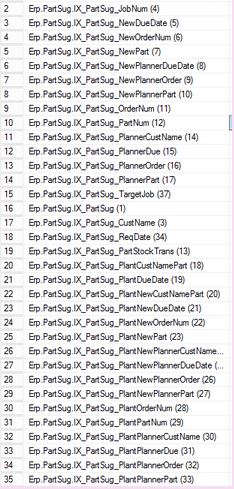

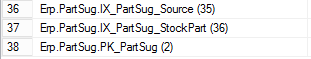

For the PartSug Table (this one causes us problems when we run MRP) there are 36 Indexes (35NC + 1CX):

Can that be considered “a lot”? Brent Ozar has a 5 and 5 rule/suggestion (of course there are exceptions) but he usually recommends 5 indexes on a table and 5 columns per index.

Too Many Fields in One Index: The more columns you add to an index, the “heavier” (GB a page takes up and row count) that index gets. This is particularly important if writing to the table happens. The more indexes I add, the more work my writes have to do. The more columns are in each index, the more that gets magnified.

I hope I undersatnd this correctly but for the PartSug Table all of the Non-Clustered Indexes are Non-Unique which means that SQL Server will add all of the columns from the Clustering Key to the NC Index to make it unique. For this example, the Clustering Key of the Table includes all 16 Columns of the Clustered Index. All 16 of those columns are added to EACH NC Index (duplicates are removed if they are in the NC Index Keys) which means that each of the 35 NC Indexes have at least all 16 Clustered Index Columns in them. And any time any of these 16 Columns need to be changed or a new record added or deleted ALL of the NC Indexes need to be touched.

Locking Blocks: Locking and blocking is a normal and required activity in SQL Server. It happens when two processes (threads) need access to same piece of data concurrently so one process locks the data and the other one needs to wait for the first one to complete and release the lock. As soon as the first process is complete, the blocked process resumes operation. The blocking chain is like a queue: once the blocking process is complete, the next process can continue. In a normal server environment, infrequent blocking locks are acceptable. However, if we execute long, complex transactions that read lots of data, and take a long time to execute, then locks will be held for extended periods, and blocking can become a problem. When blocking occurs for extended time periods, it can impact the performance of many user processes. With blocking, however severe, no error is raised.

DeadLocks: (We are currently experience several Deadlocks on our PartSug table each time we run MRP.) Occurs when one process is blocked and waiting for a second process to complete its work and release locks, while the second process at the same time is blocked and waiting for the first process to release the lock. In a deadlock situation, the processes are already blocking each other so there needs to be an external intervention to resolve the deadlock. For that reason, SQL Server has a deadlock detection and resolution mechanism where one process needs to be chosen as the “deadlock victim” and killed so that the other process can continue working. The victim process receives a very specific error message indicating that it was chosen as a deadlock victim and therefore the process can be restarted.

I just wonder if Too many Indexes on one Table, Too many Fields on one Index and Locking and Blocking are what is slowing MRP down, especially when you have more than a few threads running at one time. In the PartSug example above, if a large number of threads are used to run MRP it could potentially mean that they are all trying to make changes to the 35 NC Indexes at one time. (And this is just ONE Table that I’m looking at!) And if you lower the amount of threads that MRP is running it doesn’t run into as many Locking and Blocking issues and it will run a lot faster. Just a theory of mine. I’m a newbie to all of this so I apologize if any of my information above is wrong and would be interested to know if anyone else has any additional ideas. Thanks!