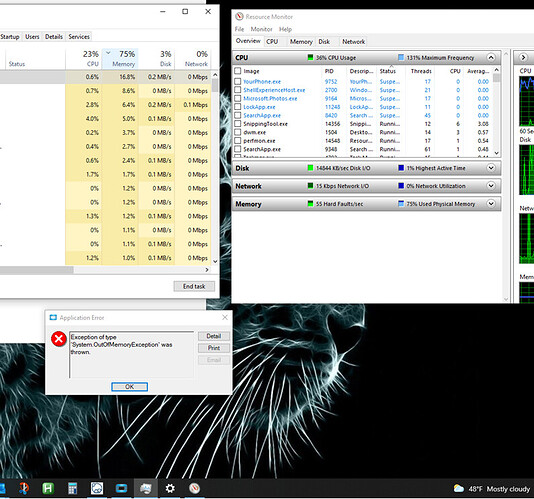

Shoot. We started seeing a similar server-side error in one of our UBAQs on Monday, after server maintenance. Was it a Windows update that caused it?

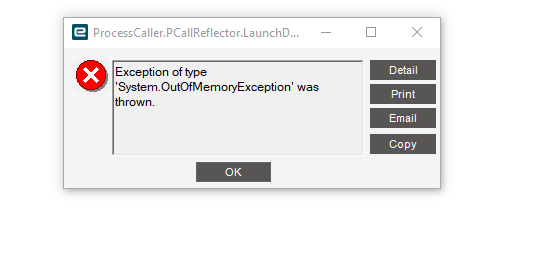

This is our error:

Server Side Exception

Unable to write message due to insufficient memory.

Exception caught in: mscorlib

Error Detail ============
Correlation ID: 00000000-0000-0000-0000-000000000000
Description: Unable to write message due to insufficient memory.
Inner Exception: Exception of type 'System.OutOfMemoryException' was thrown.
Program: System.ServiceModel.Internals.dll
Method: AllocateByteArray
Original Exception Type: InsufficientMemoryException
Framework Method: WriteMessage
Framework Line Number: 0
Framework Column Number: 0
Framework Source: WriteMessage at offset 282 in file:line:column <filename unknown>:0:0

Server Trace Stack:
at System.Runtime.Fx.AllocateByteArray(Int32 size)
at System.Runtime.InternalBufferManager.PooledBufferManager.TakeBuffer(Int32 bufferSize)
at System.Runtime.BufferedOutputStream.ToArray(Int32& bufferSize)
at System.ServiceModel.Channels.BufferedMessageWriter.WriteMessage(Message message, BufferManager bufferManager, Int32 initialOffset, Int32 maxSizeQuota)
at System.ServiceModel.Channels.BinaryMessageEncoderFactory.BinaryMessageEncoder.WriteMessage(Message message, Int32 maxMessageSize, BufferManager bufferManager, Int32 messageOffset)
at Epicor.ServiceModel.Channels.CompressionEncoder.WriteMessage(Message message, Int32 maxMessageSize, BufferManager bufferManager, Int32 messageOffset)

Inner Trace:
Exception of type 'System.OutOfMemoryException' was thrown.:
at System.Runtime.Fx.AllocateByteArray(Int32 size)

Client Stack Trace ==================

Server stack trace:
at Epicor.ServiceModel.Channels.CompressionEncoder.WriteMessage(Message message, Int32 maxMessageSize, BufferManager bufferManager, Int32 messageOffset)
at System.ServiceModel.Channels.FramingDuplexSessionChannel.EncodeMessage(Message message)
at System.ServiceModel.Channels.FramingDuplexSessionChannel.OnSendCore(Message message, TimeSpan timeout)
at System.ServiceModel.Channels.TransportDuplexSessionChannel.OnSend(Message message, TimeSpan timeout)
at System.ServiceModel.Channels.OutputChannel.Send(Message message, TimeSpan timeout)
at System.ServiceModel.Channels.SecurityChannelFactory`1.SecurityOutputChannel.Send(Message message, TimeSpan timeout)
at System.ServiceModel.Dispatcher.DuplexChannelBinder.Request(Message message, TimeSpan timeout)
at System.ServiceModel.Channels.ServiceChannel.Call(String action, Boolean oneway, ProxyOperationRuntime operation, Object[] ins, Object[] outs, TimeSpan timeout)
at System.ServiceModel.Channels.ServiceChannelProxy.InvokeService(IMethodCallMessage methodCall, ProxyOperationRuntime operation)
at System.ServiceModel.Channels.ServiceChannelProxy.Invoke(IMessage message)

Exception rethrown at [0]:
at System.Runtime.Remoting.Proxies.RealProxy.HandleReturnMessage(IMessage reqMsg, IMessage retMsg)
at System.Runtime.Remoting.Proxies.RealProxy.PrivateInvoke(MessageData& msgData, Int32 type)
at Ice.Contracts.DynamicQuerySvcContract.RunCustomAction(DynamicQueryTableset queryDS, String actionID, DataSet queryResultDataset)
at Ice.Proxy.BO.DynamicQueryImpl.RunCustomAction(DynamicQueryDataSet queryDS, String actionID, DataSet queryResultDataset)
at Ice.Adapters.DynamicQueryAdapter.<>c__DisplayClass33_0.<RunCustomAction>b__0(DataSet datasetToSend)
at Ice.Adapters.DynamicQueryAdapter.ProcessUbaqMethod(String methodName, DataSet updatedDS, Func`2 methodExecutor, Boolean refreshQueryResultsDataset)
at Ice.Adapters.DynamicQueryAdapter.RunCustomAction(DynamicQueryDataSet queryDS, String actionId, DataSet updatedDS, Boolean refreshQueryResultsDataset)

Inner Exception ===============
Failed to allocate a managed memory buffer of 67108864 bytes. The amount of available memory may be low.
at System.Runtime.Fx.AllocateByteArray(Int32 size)
at System.Runtime.InternalBufferManager.PooledBufferManager.TakeBuffer(Int32 bufferSize)
at System.Runtime.BufferedOutputStream.ToArray(Int32& bufferSize)
at System.ServiceModel.Channels.BufferedMessageWriter.WriteMessage(Message message, BufferManager bufferManager, Int32 initialOffset, Int32 maxSizeQuota)
at System.ServiceModel.Channels.BinaryMessageEncoderFactory.BinaryMessageEncoder.WriteMessage(Message message, Int32 maxMessageSize, BufferManager bufferManager, Int32 messageOffset)
at Epicor.ServiceModel.Channels.CompressionEncoder.WriteMessage(Message message, Int32 maxMessageSize, BufferManager bufferManager, Int32 messageOffset)

Inner Exception ===============
Exception of type 'System.OutOfMemoryException' was thrown.
at System.Runtime.Fx.AllocateByteArray(Int32 size)