Help Hint for you all…

I have been refactoring some BPMs for efficiency and finding mistakes that come from past sins, both mine and others'. You can probably improve your end users' experience by making some of these same changes… note that this refactoring takes about 5 minutes but saves users a lot of frustration. THIS example applies to all users (including Cloud). Also, as encouragement to those NOT attending Insights… I learned about the need for this trick last year at Insights during the E10Help forum meeting (thank you!).

I assembled this example to demonstrate how a simple mistake can cost seconds of time.

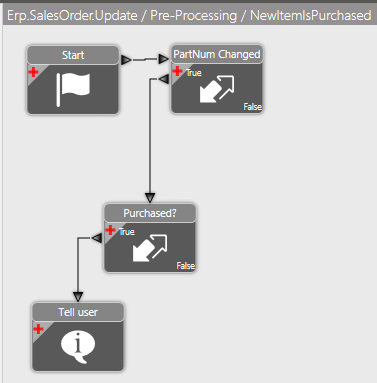

EXAMPLE BPM:

BPM Specification: If a sales order line is entered for a part that is marked as purchased in the part master, throw a message to the screen.

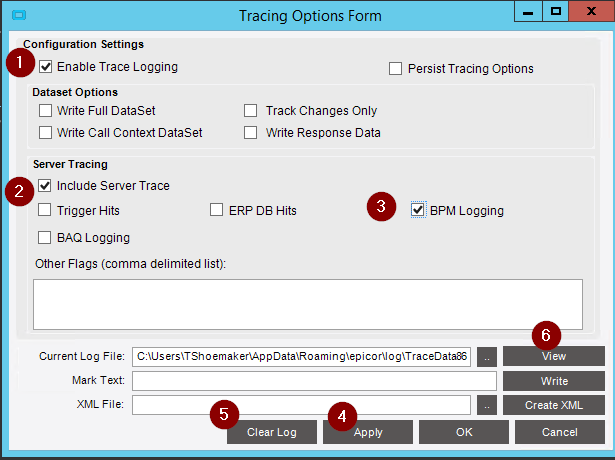

My first version (done the wrong way) takes an average of 100 milliseconds, according to the trace log:

<BpmDirective Type="1" ID="925597c7-0e43-4590-9601-22ac948497cd" Name="NewItemIsPurchased" VisibilityScope="0" Duration="100" />

The NEW version (done better) takes only 2 milliseconds:

<BpmDirective Type="1" ID="925597c7-0e43-4590-9601-22ac948497cd" Name="NewItemIsPurchased" VisibilityScope="0" Duration="2" />
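If you want to compare before/after runs of your own BPMs, the Duration attribute (milliseconds, as the numbers above show) can be pulled out of the trace log with a few lines of script. This is a minimal Python sketch, assuming each `<BpmDirective>` entry sits on its own line exactly as in the two excerpts above; a real trace log file may wrap these entries in other elements and need full XML parsing. The function name `average_bpm_duration` is hypothetical.

```python
import xml.etree.ElementTree as ET
from statistics import mean

# The two trace-log entries quoted above: first version, then the refactored one.
slow_line = '<BpmDirective Type="1" ID="925597c7-0e43-4590-9601-22ac948497cd" Name="NewItemIsPurchased" VisibilityScope="0" Duration="100" />'
fast_line = '<BpmDirective Type="1" ID="925597c7-0e43-4590-9601-22ac948497cd" Name="NewItemIsPurchased" VisibilityScope="0" Duration="2" />'


def average_bpm_duration(lines, bpm_name):
    """Average Duration (ms) of the <BpmDirective> entries for one BPM, or None if none match.

    Assumes one self-contained BpmDirective element per line, as in the excerpts above.
    """
    durations = []
    for line in lines:
        line = line.strip()
        if not line.startswith("<BpmDirective"):
            continue  # ignore every other kind of trace entry
        element = ET.fromstring(line)
        if element.get("Name") == bpm_name:
            durations.append(int(element.get("Duration")))
    return mean(durations) if durations else None


before = average_bpm_duration([slow_line], "NewItemIsPurchased")
after = average_bpm_duration([fast_line], "NewItemIsPurchased")
print(f"{before} ms -> {after} ms")
```

Against a real log you would pass the file's lines instead, e.g. `open(trace_path)` where `trace_path` is a placeholder for wherever your trace file lives.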

WHY DOES THIS MATTER? You might be thinking, “100 ms is only 1/10th of a second”… and “most of the time” it is not a big deal… BUT when a BPM runs repeatedly, those milliseconds add up to seconds. For example, a 30-line order imported via DMT or ECC, or created by Quote Conversion, fires the BPM once per line: 30 × 100 ms = at least 3 seconds of wait time. Now consider a BPM on OrderRel, and let’s pretend you have another BPM that “Firms” all the releases after you finish entering the order. Every release gets updated again, so the BPM fires again, and your 3 seconds just turned into 6 seconds.
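The arithmetic behind those numbers, as a quick Python sketch. It assumes the BPM fires exactly once per order line (or once per release, with one release per line) on each pass, and that every call costs the traced average; both are simplifications, and `cumulative_wait_seconds` is just an illustrative helper.

```python
def cumulative_wait_seconds(per_call_ms, calls, passes=1):
    """Total wait (seconds) when a BPM costing per_call_ms fires once per call, `passes` times over."""
    return per_call_ms * calls * passes / 1000


# A 30-line order, with the BPM firing once per line (assumption).
print(cumulative_wait_seconds(100, 30))            # slow version, entry only
print(cumulative_wait_seconds(100, 30, passes=2))  # a second pass, e.g. another BPM firming the releases
print(cumulative_wait_seconds(2, 30, passes=2))    # refactored version, same two passes
```

The slow version comes to 3 seconds and then 6 seconds, matching the scenario above; the refactored version stays around a tenth of a second even with both passes.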

How were these built?

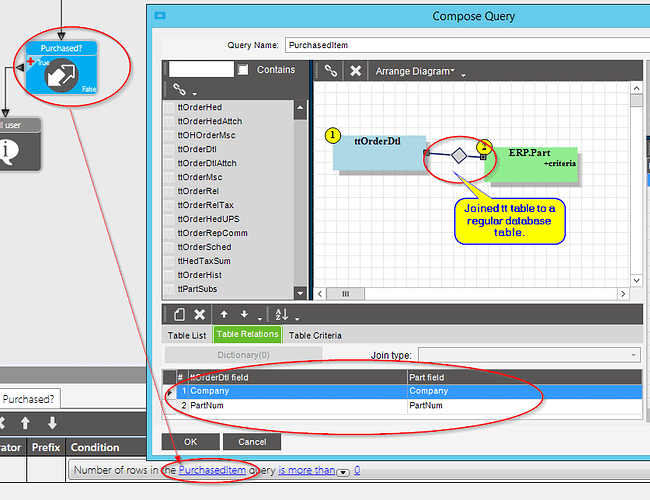

Version 1 (slow) looks like this… the PROBLEM is that it joins ttOrderDtl to the Part table… THIS IS BAD PRACTICE, and it has been discussed in the past on this very forum.

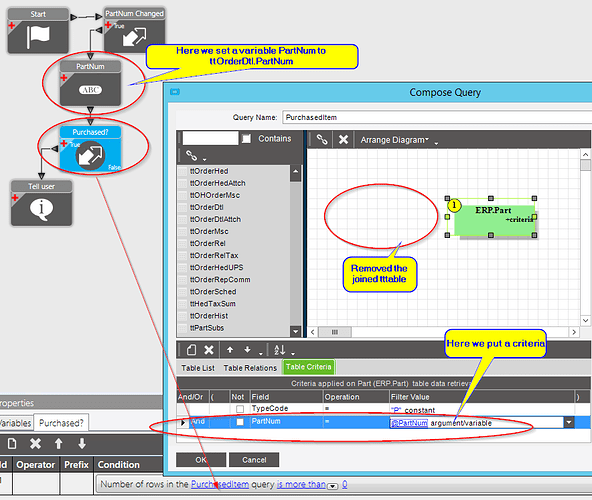

The NEW version eliminates the join… instead, we create a variable to hold the part number, populate that variable from ttOrderDtl, and then filter Part using that variable.
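The post does not spell out why the join is so much slower. A commonly given explanation (an assumption here, not something confirmed in the post) is that joining the in-memory ttOrderDtl table to the database Part table can force Part rows to be read and matched in memory, whereas filtering Part on a plain variable lets the database fetch only the one matching row by its key. The plain-Python analogy below is not Epicor code and does not reproduce the BPM widgets; the `Part`/`OrderLine` classes, the `type_code` field, and the `"P"` value are hypothetical stand-ins for "marked as purchased in the part master".

```python
from dataclasses import dataclass


@dataclass
class Part:
    part_num: str
    type_code: str  # hypothetical field: "P" marks a purchased part in this sketch


@dataclass
class OrderLine:
    part_num: str


# Hypothetical stand-in for the Part table; a real one may hold many thousands of rows.
PART_TABLE = [Part(f"PART-{i:05d}", "M") for i in range(50_000)]
PART_TABLE.append(Part("BOLT-100", "P"))
PARTS_BY_NUM = {p.part_num: p for p in PART_TABLE}  # plays the role of the index on the part number


def is_purchased_via_join(line, parts):
    """Version 1 analogy: match the in-memory order line against Part row by row."""
    return any(p.part_num == line.part_num and p.type_code == "P" for p in parts)


def is_purchased_via_variable(line, parts_by_num):
    """Version 2 analogy: copy the part number into a variable, then fetch only that row."""
    part_num = line.part_num           # create and populate the variable
    part = parts_by_num.get(part_num)  # filter Part using that variable
    return part is not None and part.type_code == "P"


line = OrderLine("BOLT-100")
if is_purchased_via_variable(line, PARTS_BY_NUM):
    print(f"Part {line.part_num} is marked as purchased")  # stands in for the BPM's message
```

Both functions give the same answer; the difference is how much of the table each one has to touch to get it, which is the same trade-off the refactored BPM makes.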

IN ADDITION… I also tried doing the entire check in C# code instead of widgets, and it still took 1-2 milliseconds to run… so in this case, widgets win!